a little Gaussian problem

Here’s a problem I heard last month that I’ve been meaning to write up.

We know that if two random variables X and Y are jointly Gaussian, then X and Y are each Gaussian. The converse is not true, even if X and Y are uncorrelated; there are standard counterexamples. What if one more condition is added?
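Here is a quick simulation of a classic example. The seed and sample size are arbitrary choices. Take \(X \sim \mathcal{N}(0, 1)\) and \(Y = SX\), where S is a random sign independent of X. By symmetry Y is again \(\mathcal{N}(0, 1)\), and \(E[XY] = E[S]E[X^2] = 0\). Yet X + Y is exactly 0 whenever S = -1. So X + Y has an atom of probability 1/2 and cannot be Gaussian, which is exactly the kind of example an extra condition on X + Y rules out.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 200_000

# X ~ N(0, 1) and S = +1 or -1 with probability 1/2 each, independent of X.
x = rng.standard_normal(n)
s = rng.choice([-1.0, 1.0], size=n)
y = s * x  # Y ~ N(0, 1) because the standard normal is symmetric

corr = np.corrcoef(x, y)[0, 1]  # near 0, since E[XY] = E[S] E[X^2] = 0
atom = np.mean(x + y == 0.0)    # X + Y = 0 exactly whenever S = -1
print(f"corr(X, Y) ~ {corr:.4f}")
print(f"P(X + Y = 0) ~ {atom:.3f}")
```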

Assume the following:

- (1) X and Y are each distributed as \(\mathcal{N}(0, 1)\)

- (2) X and Y are uncorrelated

- (3) X+Y is distributed as \(\mathcal{N}(0, 2)\)

Are X and Y jointly Gaussian or, equivalently, independent (since uncorrelated jointly Gaussian random variables are independent)?
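In this standardized setting the equivalence takes one line to verify. Suppose (X, Y) is jointly Gaussian with unit variances and zero correlation. Then its covariance matrix is the identity, and the joint density factors into the two marginals:

\(p_{X,Y}(x, y) = \frac{1}{2\pi} \exp\left(-\frac{x^2 + y^2}{2}\right) = \frac{1}{\sqrt{2\pi}} e^{-x^2/2} \cdot \frac{1}{\sqrt{2\pi}} e^{-y^2/2} = p_X(x)\, p_Y(y)\)

Conversely, independent X and Y with these marginals have exactly this joint density, which is bivariate Gaussian.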

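X and Y are jointly Gaussian if and only if every non-trivial linear combination aX + bY is Gaussian. Conditions (1) and (3) guarantee this for only three particular combinations (X, Y, and X+Y). So there is no reason to expect X and Y to be jointly Gaussian unless (3) somehow forces all the other combinations to be Gaussian as well.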

Not surprisingly, the answer is no. The difficult part is constructing a counterexample: X and Y must be individually Gaussian and uncorrelated, X+Y must also be Gaussian, and yet X and Y must not be independent.

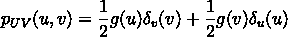

Suppose we have a prototype density function \(g(t)\). Now define random variables U and V by the joint density

where we use the Dirac delta to represent discrete probabilities.

Define X and Y by an orthogonal (real unitary) transformation of U and V, specifically a rotation by 22.5 degrees (\(\pi/8\)):

\(\left[\begin{array}{c}
X \\ Y
\end{array}\right] = \overset{A}{\overbrace{
\left[\begin{array}{cc}
\cos \frac{\pi}{8} & -\sin \frac{\pi}{8} \\
\sin \frac{\pi}{8} & \cos \frac{\pi}{8}
\end{array}\right]}}
\left[\begin{array}{c}
U \\ V
\end{array}\right]\)

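Note that X and Y are not independent (and hence not jointly Gaussian). The Dirac deltas make their joint distribution singular, so it cannot equal the product of two Gaussian densities. They are, however, uncorrelated because of quadrantal symmetry: wherever there is a positive density at (x, y), the same density appears at (-y, x), (-x, -y), and (y, -x). The map \((x, y) \mapsto (-y, x)\) therefore leaves the distribution unchanged while flipping the sign of xy, so \(E[XY] = -E[XY] = 0\).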
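The symmetry argument can be checked by simulation. The joint density of U and V is not reproduced in the text above, so this sketch makes an illustrative assumption consistent with the marginal form quoted later: a draw R from g is placed on the U axis or on the V axis with probability 1/2 each. A standard Laplace density serves as a hypothetical stand-in for a symmetric g. For this stand-in, theory gives \(E[XY] = 0\) but \(E[X^2 Y^2] = 3\), while \(E[X^2]E[Y^2] = 1\), and that gap exposes the dependence.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 400_000

# ASSUMED construction (illustration only): R ~ g lands on the U axis or the
# V axis with probability 1/2 each; g is a hypothetical stand-in (standard Laplace).
r = rng.laplace(size=n)
on_u_axis = rng.random(n) < 0.5
u = np.where(on_u_axis, r, 0.0)
v = np.where(on_u_axis, 0.0, r)

# X and Y from the 22.5-degree rotation A.
theta = np.pi / 8
A = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])
x, y = A @ np.vstack([u, v])

# Quadrantal symmetry forces E[XY] = 0 ...
mean_xy = np.mean(x * y)
# ... but X and Y are dependent: E[X^2 Y^2] differs from E[X^2] E[Y^2].
fourth = np.mean(x**2 * y**2)
product = np.mean(x**2) * np.mean(y**2)
print(f"E[XY] ~ {mean_xy:.4f}")
print(f"E[X^2 Y^2] ~ {fourth:.2f} vs E[X^2] E[Y^2] ~ {product:.2f}")
```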

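The choice of 22.5 degrees matters too, because it gives the marginals a common form. Besides the marginals along the 0-degree and 90-degree axes (X and Y), assumption (3) requires the marginal along the 45-degree axis (X+Y). In the U-V coordinates, the directions of X, Y, and X+Y each make an angle of 22.5 degrees with one of the U, V axes and 67.5 degrees with the other. As a result, all three marginals take the same form.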
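The following numerical check of the geometry uses plain NumPy; the helper `rot` is introduced here for illustration. It confirms three things. First, A is orthogonal. Second, the matrix that maps (X, Y) to (X+Y, -X+Y) in the derivation below, multiplied by A, equals \(\sqrt{2}\) times a rotation by -22.5 degrees. Third, the coefficient vectors of X, Y, and \((X+Y)/\sqrt{2}\) in terms of (U, V) each lie 22.5 degrees from one axis and 67.5 degrees from the other.

```python
import numpy as np

def rot(theta):
    """Counterclockwise 2x2 rotation matrix by theta radians."""
    return np.array([[np.cos(theta), -np.sin(theta)],
                     [np.sin(theta),  np.cos(theta)]])

A = rot(np.pi / 8)                       # (U, V) -> (X, Y)
B = np.array([[1.0, 1.0], [-1.0, 1.0]])  # (X, Y) -> (X + Y, -X + Y)

is_orthogonal = np.allclose(A.T @ A, np.eye(2))
is_scaled_rotation = np.allclose(B @ A, np.sqrt(2) * rot(-np.pi / 8))
print("A orthogonal:", is_orthogonal)
print("B A = sqrt(2) R(-pi/8):", is_scaled_rotation)

# Coefficients of each variable on (U, V); (X + Y)/sqrt(2) is normalised to unit length.
directions = {"X": A[0], "Y": A[1], "(X+Y)/sqrt(2)": (B @ A)[0] / np.sqrt(2)}
angles = {}
for name, d in directions.items():
    # Angle between the coefficient vector and the U axis, folded into [0, 90] degrees.
    ang = np.degrees(np.arccos(abs(d[0]) / np.linalg.norm(d)))
    angles[name] = ang
    print(f"{name}: {ang:.1f} deg from the U axis, {90 - ang:.1f} deg from the V axis")
```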

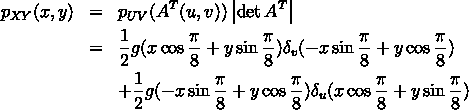

By change of variables, we have

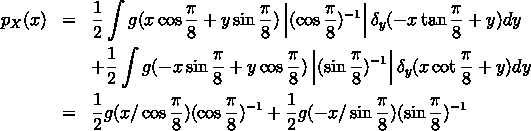

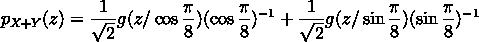

Next we find \(p_X\), \(p_Y\), and \(p_{X+Y}\). We can do this formally (or even by inspection):

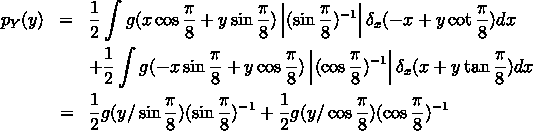

Similarly,

and since

\(\left[\begin{array}{c}
X+Y \\ -X+Y
\end{array}\right] =
\left[\begin{array}{cc}
1 & 1 \\
-1 & 1
\end{array}\right] A
\left[\begin{array}{c}
U \\ V
\end{array}\right] = \sqrt{2}
\left[\begin{array}{cc}
\cos \left(\frac{\pi}{8} - \frac{\pi}{4}\right) & -\sin \left(\frac{\pi}{8} - \frac{\pi}{4}\right) \\
\sin \left(\frac{\pi}{8} - \frac{\pi}{4}\right) & \cos \left(\frac{\pi}{8} - \frac{\pi}{4}\right)
\end{array}\right]
\left[\begin{array}{c}
U \\ V
\end{array}\right]\)

which is a (scaled) rotation by -22.5 degrees, we immediately have

Looking at the marginal densities, we see that they all have the same form, namely \(\alpha g(\alpha t) + \beta g(\pm \beta t)\). If we also require \(g(t)\) to be symmetric, the problem reduces to finding a \(g(t)\) such that \(\alpha g(\alpha t) + \beta g(\beta t)\) has the right shape (a Gaussian), i.e. is proportional to \(h(t) = \exp(-t^2)\).
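The claim that the three marginals share one form can be checked by simulation, under an explicit assumption. The joint density of U and V is not reproduced in the text, so this sketch assumes the following: R ~ g is placed on the U axis or on the V axis with probability 1/2 each; g is a standard Laplace density (a hypothetical symmetric stand-in); and \(\alpha = 1/\cos(\pi/8)\), \(\beta = 1/\sin(\pi/8)\), which are the scale factors that assumption produces. With the 1/2 mixture weight, each marginal should then match \(\frac{1}{2}[\alpha g(\alpha t) + \beta g(\beta t)]\).

```python
import numpy as np

rng = np.random.default_rng(2)
n = 400_000
c, s = np.cos(np.pi / 8), np.sin(np.pi / 8)
alpha, beta = 1 / c, 1 / s  # scale factors under the assumed construction

def g(t):
    """Hypothetical symmetric prototype g: the standard Laplace density."""
    return 0.5 * np.exp(-np.abs(t))

# ASSUMED construction: R ~ g on the U axis or the V axis, probability 1/2 each.
r = rng.laplace(size=n)
on_u_axis = rng.random(n) < 0.5
u = np.where(on_u_axis, r, 0.0)
v = np.where(on_u_axis, 0.0, r)
x = c * u - s * v
y = s * u + c * v

edges = np.linspace(-3.0, 3.0, 121)
mid = 0.5 * (edges[:-1] + edges[1:])
predicted = 0.5 * (alpha * g(alpha * mid) + beta * g(beta * mid))

errors = {}
for name, z in {"X": x, "Y": y, "(X+Y)/sqrt(2)": (x + y) / np.sqrt(2)}.items():
    counts, _ = np.histogram(z, bins=edges)
    density = counts / (n * np.diff(edges))  # histogram estimate of the density
    errors[name] = np.max(np.abs(density - predicted))
    print(f"{name}: max |histogram - formula| = {errors[name]:.3f}")
```

Swapping in another symmetric g changes the shape of the common marginal but not the fact that all three marginals coincide.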

This is not too hard. Let \(k = |\beta / \alpha|\), which we may assume to be greater than 1 without loss of generality. Define \(g(t)\) arbitrarily on an interval \((\gamma, k\gamma]\) for some \(\gamma > 0\). That determines \(g(t)\) for all \(t > 0\) recursively, as follows:

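\(\because g(t) + k g(kt) = h(t/\alpha) / \alpha\) (set \(\alpha g(\alpha t) + \beta g(\beta t) = h(t)\), which fixes the proportionality constant, then substitute \(t/\alpha\) for \(t\) and divide by \(\alpha\)),

\(\therefore g(kt) = h(t/\alpha) / (k\alpha) - g(t)/k\) and

\(g(t/k) = h(t/(k\alpha)) / \alpha - k g(t)\) (replacing \(t\) by \(t/k\)).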

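Finally, define \(g(0) = h(0)/(\alpha(1+k))\), the value obtained by setting \(t = 0\) in the equation above (a single point does not affect a density anyway). Then let \(g(-t) = g(t)\) and scale \(g\) appropriately. QED.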
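The recursion can be sketched directly. In this sketch, `make_g` and the base profile `g_base` are hypothetical names, and \(h(t) = \exp(-t^2)\) as in the text. The values \(\alpha = 1/\cos(\pi/8)\) and \(k = \cot(\pi/8) \approx 2.414\) are assumptions; they are what a construction with all of the mass of (U, V) on the two axes would give. The base interval uses \(\gamma = 1\). The code enforces only the functional equation \(g(t) + k g(kt) = h(t/\alpha)/\alpha\). It does not check that the resulting g is nonnegative or normalizable.

```python
import math

ALPHA = 1 / math.cos(math.pi / 8)  # assumed value of alpha (see lead-in)
K = 1 / math.tan(math.pi / 8)      # assumed k = |beta/alpha| = cot(pi/8) ~ 2.414 > 1

def h(t):
    return math.exp(-t * t)

def make_g(g_base, alpha=ALPHA, k=K, gamma=1.0):
    """Extend g_base, given on (gamma, k*gamma], to all t != 0 so that
    g(t) + k*g(k*t) = h(t/alpha)/alpha for t > 0, and g(-t) = g(t)."""
    def g(t):
        if t == 0:
            raise ValueError("g(0) is set separately in the text")
        t = abs(t)  # symmetric extension g(-t) = g(t)
        # Choose n so that s = t / k**n falls in the base interval (gamma, k*gamma].
        n = math.ceil(math.log(t / gamma) / math.log(k)) - 1
        s = t / k**n
        val = g_base(s)
        for _ in range(max(n, 0)):   # step up:   g(k s) = h(s/alpha)/(k alpha) - g(s)/k
            val = h(s / alpha) / (k * alpha) - val / k
            s *= k
        for _ in range(max(-n, 0)):  # step down: g(s/k) = h(s/(k alpha))/alpha - k g(s)
            val = h(s / (k * alpha)) / alpha - k * val
            s /= k
        return val
    return g

# Any profile on the base interval works for the recursion; this one is arbitrary.
g = make_g(lambda s: 0.2 * math.exp(-s))
for t in (0.1, 0.37, 1.9, 4.2):
    residual = g(t) + K * g(K * t) - h(t / ALPHA) / ALPHA
    print(f"t = {t}: residual = {residual:.2e}")
```

Going down toward 0, each step multiplies any deviation by \(-k\), so values of g for small t depend sensitively on the choice of g_base.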

Note that \(g(t)\) is by no means a “nice” function, but that is no issue for this problem.

It is unclear what generalizations are possible. What if we require more linear combinations of X and Y to be Gaussian? My guess is that X and Y still need not be jointly Gaussian, but counterexamples may be harder to come by. It would be interesting to know whether a finite number of linear-combination constraints could ever force joint Gaussianity, or whether a sum of a small number of prototype functions would be enough to generate a counterexample.