latency arbitrage

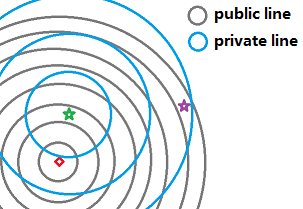

Michael Lewis has been in the news for his new book, Flash Boys, decrying the problems brought about by a system ill-equipped to deal with high-frequency trading. The core problem can be summarized schematically by the picture above:

I place an order from the location of the red square to the green and purple exchanges on which trades occur. My communication capability on the gray “public” channel is slower than the communication capability of some competing agent on the blue “private” channel. Therefore, triangle inequality notwithstanding, the competing agent observes my actions at the green exchange and reacts at the purple exchange before my order arrives there. It appears to me exactly like I have been scooped by somebody acting anti-causally, so what happened?

Well, somebody (the competing agent) did see the future, in some sense. Simultaneity does not exist at this time scale, just like in special relativity. The competing agent is simply taking advantage of this fact.
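To make the race concrete, here is a minimal sketch of the timing argument. The function names and all the delay values are hypothetical, chosen only for illustration; it also assumes the competing agent sits at the green exchange and sees my order the instant it arrives there.

```python
def arrival_times(t_me_green, t_me_purple, t_private, t_react=0.0):
    """Return (my arrival at purple, competitor's arrival at purple).

    All arguments are one-way delays in milliseconds (hypothetical values):
      t_me_green  - my order to the green exchange on the public channel
      t_me_purple - my order to the purple exchange on the public channel
      t_private   - green to purple on the competitor's private channel
      t_react     - competitor's processing time after seeing my order
    """
    mine = t_me_purple
    theirs = t_me_green + t_react + t_private
    return mine, theirs


def competitor_wins(t_me_green, t_me_purple, t_private, t_react=0.0):
    """True if the competitor reaches purple before my own order does."""
    mine, theirs = arrival_times(t_me_green, t_me_purple, t_private, t_react)
    return theirs < mine


# Fast private link: the competitor gets to purple first.
print(competitor_wins(t_me_green=2.0, t_me_purple=5.0, t_private=2.0, t_react=0.1))
# Private link no faster than the public one: the detour through green loses.
print(competitor_wins(t_me_green=2.0, t_me_purple=5.0, t_private=3.5, t_react=0.1))
```

If both channels ran at the same speed, the detour through green could never beat my direct route to purple. Only the faster private leg makes the race winnable.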

This is of course old news, but it’s nevertheless interesting to hear the two perspectives — one that considers this as front-running and one that considers this as fair competition. After all, all that the competing agent had was a more capable channel, which no one is prohibited from obtaining. This “difference of opinion” is of course nothing of the sort, but rather the symptom of a much deeper problem.

Consider this question: do we assume there is a single market on which all trades occur? The SEC certainly did when it promulgated Regulation NMS, which among other things blithely assumed the existence of a “National Best Bid and Offer” (NBBO), basically a market-wide best price across numerous exchanges. In the days when communication delays were short compared to the interval between market transitions (i.e. low-frequency trading), there was indeed effectively a single market. But now that communication delays are long compared to the interval between market transitions (i.e. high-frequency trading), the assumption breaks down. The exchanges, if they are some distance apart, represent different partial markets, each with a local price. There is no way to pretend there is a single market with a single price, despite the frantic latency arbitrage that high-frequency strategies perform to synchronize prices across exchanges. (Yes, they are actually performing this service.) It’s futile. There will always be this inefficiency to exploit, and it is an inherent frictional cost of this kind of market structure, to say nothing of the information asymmetry caused merely by the different locations of agents and their communication capabilities. In other words: bad system design; bad, because nobody actually “designed” it and probably nobody thinks of it as one “system.”
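The two regimes can be told apart with a back-of-the-envelope check. The sketch below is only illustrative: the function names, the 1,000 km separation, the update intervals, and the safety `margin` of 10 are all assumptions of mine. The only hard number is the speed of light in vacuum, which is a lower bound on delay (real links are slower).

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458  # vacuum; real channels are slower


def one_way_delay_ms(distance_km, signal_speed_km_per_s=SPEED_OF_LIGHT_KM_PER_S):
    """Best-case one-way propagation delay between two exchanges, in ms."""
    return distance_km / signal_speed_km_per_s * 1000.0


def is_single_market(distance_km, update_interval_ms, margin=10.0):
    """True when market transitions are slow compared to propagation delay.

    `margin` is an arbitrary factor encoding how much slower "slow" must be.
    """
    return update_interval_ms >= margin * one_way_delay_ms(distance_km)


distance_km = 1000.0  # hypothetical separation between two exchanges
print(round(one_way_delay_ms(distance_km), 3), "ms one-way at best")
print(is_single_market(distance_km, update_interval_ms=1000.0))  # low-frequency regime
print(is_single_market(distance_km, update_interval_ms=0.1))     # high-frequency regime
```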

So let’s recap. Today’s trading system has several problems. As technology has improved, many assumptions that were approximately correct, such as simultaneity and the law of one price, are no longer valid. As a result, a single NBBO is not even well defined. Instead we have point-to-point information flows whose paths cross at certain geographic midpoints.

What to do? Actually, this problem has been solved before. As logic components in computer systems became faster, propagation delay became important, and if it weren’t for clocks, systems would enter ambiguous states and become unstable. The solution was latched clocking: allow state changes only at quantized intervals of time, so that in the intervening time the changes have the opportunity to propagate across the entire system and the overall system state is once again consistent. The same thing works for trading markets, i.e. allow the market state (trades and incoming orders) to update only at quantized intervals of time, and allow enough time between updates for this information to be received by all exchanges forming the same market. The goal is to get back to the regime where communication delays are short compared to the interval between market transitions.
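Here is a minimal sketch of what a clocked market could look like, assuming each interval is cleared as a uniform-price batch. The `Order` class, the function names, and the tie-breaking rule (lowest volume-maximizing price) are my own illustrative choices, not a description of any actual exchange mechanism.

```python
from dataclasses import dataclass


@dataclass
class Order:
    side: str     # "buy" or "sell"
    price: float  # limit price
    qty: int


def clear_batch(orders):
    """Clear one batch at a single price; return (price, volume).

    Picks the lowest candidate price that maximizes matched volume.
    Returns (None, 0) if buyers and sellers do not cross.
    """
    buys = [o for o in orders if o.side == "buy"]
    sells = [o for o in orders if o.side == "sell"]
    best_price, best_volume = None, 0
    for p in sorted({o.price for o in orders}):
        demand = sum(o.qty for o in buys if o.price >= p)
        supply = sum(o.qty for o in sells if o.price <= p)
        volume = min(demand, supply)
        if volume > best_volume:
            best_price, best_volume = p, volume
    return best_price, best_volume


def run_clocked_market(timed_orders, interval):
    """Latch (timestamp, Order) pairs into ticks of length `interval`, then clear each tick."""
    batches = {}
    for t, order in timed_orders:
        batches.setdefault(int(t // interval), []).append(order)
    return {tick: clear_batch(batch) for tick, batch in sorted(batches.items())}


# Hypothetical order flow; timestamps are in the same unit as `interval`.
flow = [
    (0.1, Order("buy", 10.0, 5)),
    (0.9, Order("sell", 9.0, 3)),
    (1.2, Order("buy", 10.0, 1)),
    (1.5, Order("sell", 11.0, 1)),
]
print(run_clocked_market(flow, interval=1.0))
```

Within a tick, the order in which messages arrive does not change the outcome, so being a few microseconds faster buys nothing as long as the interval is longer than the worst propagation delay between exchanges.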

Elaine Wah of the University of Michigan has a paper on this, with analysis showing the actual financial benefits of removing the inefficiency inherent in continuous trading. Generally, market performance should improve when we clock at speeds relevant to the underlying information-generating and decision-feedback processes. Clock any faster and we introduce noise; moreover, by not allowing signals (trading interests) to fully mix into a steady state, we end up introducing large transients (volatility, worse executions) into the system.

That aside, it is great to see EECS getting involved in solving what is essentially an information and engineering problem, using methods that have been tried and tested in digital computer circuits.